This post started out as a comment on the MAYA (Most Advanced Yet Acceptable) design concept but somehow turned into a ramble on the place of OpenSim in the world of educational VR. My apologies…

The context: how we got here

It seems that every 10 years or so virtual reality (VR) hits the headlines and then fades from view during a so-called virtual world winter. In 2007 it was the hype surrounding Second Life (SL) that spawned many imitators, including OpenSim, which is open source but to a degree content-compatible with the commercial SL. We talked of immersive environments then but were largely focused on the desktop display.

In 2017, however, it is Head-Mounted Displays (HMDs) that are in the limelight, inspired by the 2014 acquisition of Kickstarter project Oculus by Facebook for $2bn. Hot on the heels of the Oculus Rift we have the likes (in no particular order) of Google Daydream, HTC Vive, Samsung Gear VR, the PSVR and whatever Microsoft delivers in terms of mixed and augmented reality. Never before have so many Big Players been engaged but even so there is pragmatism among the industry hype. Gabe Newell of Valve is "comfortable" with VR being a "complete failure" (though, of course, he expects the opposite).

The advent of the Big Players, of course, is part of the problem. Everyone wants a piece of the action if only through Fear of Missing Out. The Big Players have deep pockets and get most attention but are constrained by their very scale, running the risk of being blind-sided by smaller, more nimble competitors as the technology evolves and fresh thinking emerges. Beyond the devs and early adopters, the consumer is irked by high ticket prices, variable-quality content and the suspicion that technology churn is going to lead to an evolutionary dead end and buyer's remorse. A few recall Google's 6-month excursion into desktop VR in the form of Google Lively back in 2008.

Standards such as OpenXR may afford some much-needed interoperability in due course but these are early days.

The perspective: looking to the future through successful design concepts of the past

Of course, everyone is sure that what will deliver a return on their investment is "the experience" but nobody is quite sure what that will be. Games? Education? Virtual tourism? Beyond games and apps, will we see packaged experiences as in Sansar or something more like a virtual world? Will Facebook Rooms dominate social VR by default?

It was noticeable that senior figures interviewed at the recent GDC were reluctant to identify the "killer app" of VR, one preferring to talk pragmatically (that word again) of multiple domain-specific "hero apps" instead.

The three principles

An article on industry website Upload VR attempts to bring some order to this uncertainty by inviting folk to look back at design principles that have proven useful in the past.

The first, The Message of the Medium, reminds us that we are still at an early stage and may not be using VR appropriately. Moreover, the principle also encompasses a "known unknown", the likelihood that we won't know what any new technology is good for until we've advanced beyond the preconceptions inherited from its predecessor.

In this regard the most awesome feature of virtual worlds is also their primary weakness, namely that almost anything can be developed there (and it's implicitly multi-user) but that more often than not there is a simpler way to achieve the same end-result. Indeed, our affinity for phones and apps depends on the work of super-smart folk reducing the friction involved in one particular task to levels of comfort amenable to mass adoption. This is a tough "ask" for amateur developers in virtual worlds although the phenomenon of Minecraft encourages us to see the user as the developer and a highly engaged one at that.

The second principle, MAYA (Most Advanced Yet Acceptable), was formulated by Raymond Loewy:

Our desire is naturally to give the buying public the most advanced product that research can develop and technology can produce. Unfortunately, it has been proved time and time again that such a product does not always sell well. […] The adult public’s taste is not necessarily ready to accept the logical solutions to their requirements if the solution implies too vast a departure from what they have been conditioned into accepting as the norm.

So what most people are comfortable with is significant but incremental progress rather than an apparently unconsidered leap into a technology they didn't know they needed (Apple might be an exception). You can make a boring product more acceptable by giving it a modest degree of novelty (a shiny surface) but may have to tone down features if you seek mass-market appeal from a revolutionary product.

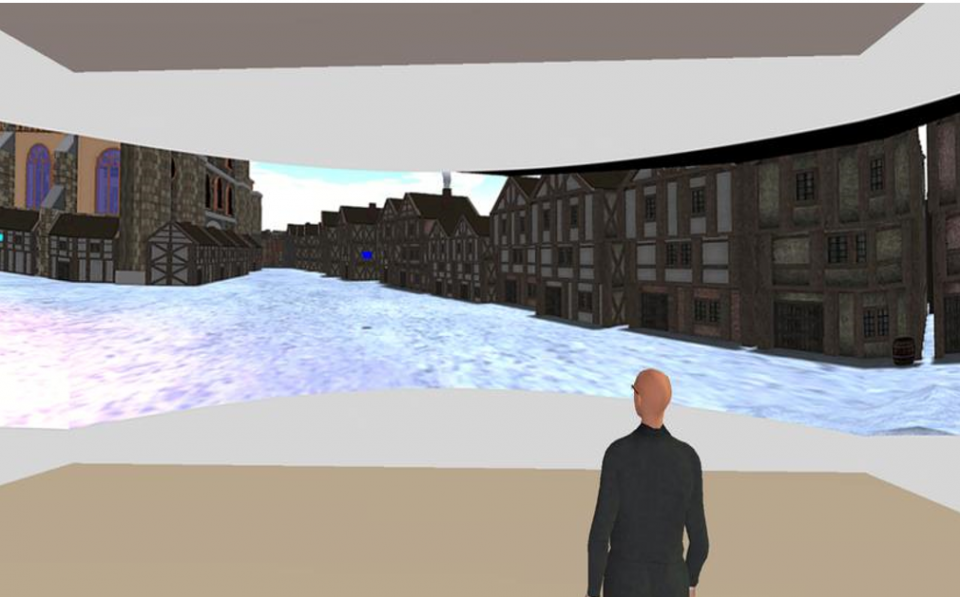

The third and final principle is Create comfort, then move on, i.e. work from a basis of familiarity using an interface that mimics reality. Perhaps the educational experience needs to start from the familiar, the campus library, the laboratory, the lecture theatre, even if it subsequently branches away once the students have found their "wings". This approach of cloning the campus was derided (a lot) back in 2007.

What does this mean for VR and for OpenSim?

The new developments in VR based on improbable levels of investment encourage us to view OpenSim through the lens of a deficit model ("what it can't do") rather than focusing on what we can use its very rich feature set to achieve or, at worst, prototype.

The deficit model is a siren call to add new features that will "make the difference" and lead to mass adoption. If the principles above have value, however, they suggest that we need instead to simplify the experience, not for the benefit of the devs and early adopters, but for the average user, both in terms of the viewer and the inworld build. The Outworldz DreamWorld installer points in this direction with the (potentially configurable) OnLook viewer and its own library of OAR-based experiences. An educational edition would be a very interesting development (there is some educational content already).

However, we need always to ask why we are using OpenSim and to be open to novel possibilities that emerge as we work with a sim. For example, if I'm interested in learning about the circular economy, why not script multi-component objects that provide a service (a simplified self-driving car, perhaps) but remain the property of the supplier, that can be shared, that age, can be refurbished, and have their components recycled and cascaded? Then get students to participate in the economy, both as creators and consumers.
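To make the circular-economy idea a little more concrete, here is a minimal sketch of the logic such an object might follow. It is written in Python purely for illustration, not as an inworld script, and every name in it (`Component`, `ServiceObject`, `use`, `refurbish`, the wear values) is a hypothetical of mine rather than anything OpenSim provides. It covers ownership staying with the supplier, sharing, ageing, refurbishment and recycling; cascading components into lower-grade uses is left out.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    """One part of a service object; wear runs from 0.0 (new) to 1.0 (worn out)."""
    name: str
    wear: float = 0.0

    def is_worn_out(self) -> bool:
        return self.wear >= 1.0


@dataclass
class ServiceObject:
    """A shared object (e.g. a simplified self-driving car) that stays the supplier's property."""
    owner: str                                   # hypothetical: the supplier's name
    components: list[Component]
    users: list[str] = field(default_factory=list)           # everyone who has shared it
    recycled: list[Component] = field(default_factory=list)  # worn parts sent for recycling

    def use(self, user: str, wear_per_use: float = 0.25) -> bool:
        """Provide the service once; every component ages a little.

        Returns False (service refused) if any component is already worn out.
        """
        if any(c.is_worn_out() for c in self.components):
            return False
        self.users.append(user)
        for c in self.components:
            c.wear = min(1.0, c.wear + wear_per_use)
        return True

    def refurbish(self) -> int:
        """Replace worn-out components with new ones and recycle the old ones.

        Returns the number of components replaced.
        """
        replaced = 0
        for i, c in enumerate(self.components):
            if c.is_worn_out():
                self.recycled.append(c)
                self.components[i] = Component(c.name)
                replaced += 1
        return replaced


# Assumed scenario: a car with a new battery and half-worn tyres, shared by three students.
car = ServiceObject(
    owner="supplier",
    components=[Component("battery"), Component("tyres", wear=0.5)],
)
rides = [car.use(student) for student in ("alice", "bob", "carol")]
replaced = car.refurbish()
```

The point of keeping `owner` fixed while `users` grows is that students consume a service rather than buy an object, which is the behaviour the economy is meant to let them experience.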

In short, make things simple (enough) and make them for a reason.

So let's be positive

In conclusion, the OpenSim ecosystem is wonderfully rich and diverse yet for the most part coherent in a way that standards bodies will find hard to impose on Big Players. My belief is that its potential remains largely unexplored or at least underexploited. Encouragingly, its use is not predicated on significant (and recurrent) investment in new kit. It can, however, be used with phone-based Google Cardboard HMDs via the Lumiya viewer should that be a requirement¹. It is, of course, open source and extensible.

Let's not pretend all is perfect with OpenSim and that it can replicate the totality of the fascinating work currently underway in HMD-mediated VR. On the other hand the marginal benefits of the HMD approach need to be balanced against cost at scale. This argues, perhaps, in favour of important but niche applications for HMDs rather than general adoption. The consequence may be loss of coherence as multiple diverse acquisitions are made across large institutions.

Meanwhile, back in the real world there are things OpenSim educators can do to help themselves. While the community sometimes bemoans the lack of core developers, what is more crucial as far as educators are concerned is a network to support the development and distribution of innovative educational applications. Kay McLennan's recent establishment of an Educator Commons is a valuable step in the right direction and hopefully more will follow.

My personal belief is that the key to the future of educational use of OpenSim lies in getting beyond the deficit mindset and making the most of the current potential. If we do that, the future will be more likely to look after itself.

Early session at the OpenSim Community Conference 2016